The new risks ChatGPT poses to cyber security

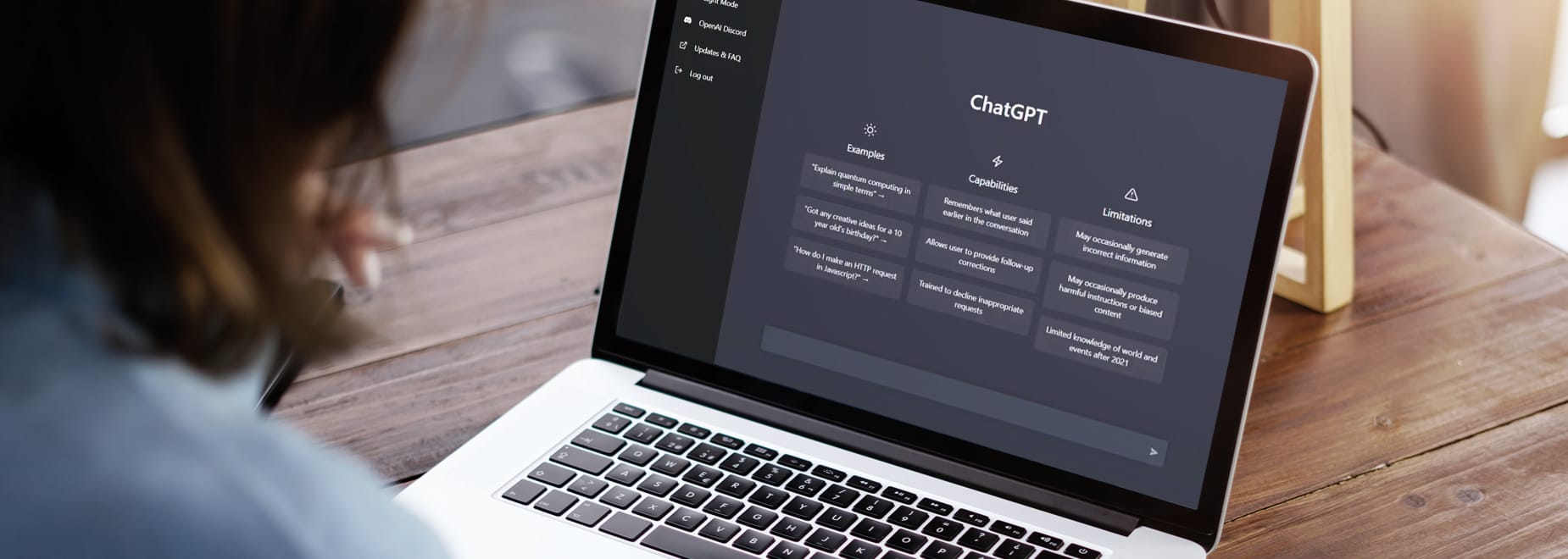

ChatGPT has become a symbol of AI’s limitless potential, igniting a technological revolution that is reshaping numerous aspects of modern life and redefining the boundaries of human-machine collaboration. Its latest version, built on GPT-4, has not only mastered the art of generating human-like language, but it can also code in a variety of programming languages. As we explore the countless benefits and use cases of this application, so do hackers, who are exploiting the tool’s potential to scale their attacks by creating better phishing emails, writing hard-to-detect malware, and refining their impersonation tactics.

While ChatGPT presents dangers when used by wrongdoers, other seemingly harmless uses of the tool may also pose security problems. ChatGPT was trained on a vast amount of data from several sources, but it also constantly learns from the users’ personal information and input, which may include sensitive data. This has raised many questions around the security risks that the handling of this data entails, especially regarding data privacy and copyright infringement.

However, despite many public and private organisations currently directing their attention toward these issues, we still lack official guidelines and legislation regulating the use of this tool. In light of these challenges, it is important to understand the main cyber security implications of using ChatGPT and learn how to use it safely. Read on to learn everything you need to strike the right balance between embracing innovation and minimizing security risks.

What is ChatGPT, and how does it work?

ChatGPT is an advanced AI language model designed to generate human-like text by understanding and processing natural language input from users. It has been trained on a diverse range of sources to gain an extensive understanding of various topics, and it learns by predicting and generating text, word by word, in a way that makes sense in the given context.
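To make the idea of generating text word by word concrete, here is a deliberately tiny sketch. It is not how ChatGPT is implemented: it assumes a toy bigram model that only counts which word follows which in a short training text, and the function names `train_bigram` and `generate` are hypothetical. A large language model replaces the counting with a neural network trained on vastly more data, but the generation loop has the same shape.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    out = [start]
    for _ in range(max_words - 1):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # the toy model has never seen anything after this word
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Toy training text (an assumption for illustration only).
model = train_bigram("the cat sat on the cat")
print(generate(model, "the", max_words=4))
```

The sketch also shows why such a model cannot know anything that was absent from its training text: a word it has never seen simply ends the generation.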

The model employs a machine learning technique called transfer learning, where it is first trained on a data-rich task and subsequently fine-tuned for a specific activity. During the pre-training phase, it learns from a massive dataset drawn from parts of the Internet, and it retains no specific knowledge of which sources were used. It is important to note that, once trained, this model does not consult the Internet to generate its replies, which is why its knowledge is limited to the information it received during its training, with a cutoff date of September 2021.

Besides its initial training, ChatGPT is constantly improved by incorporating users’ feedback and learning from their input, a phase in which AI trainers provide conversation examples and rate the quality of model-generated responses. This contributes to improving the user experience but poses several data protection and privacy risks.

Data security in the AI era: Safeguarding users’ rights in the world of ChatGPT

The security risks associated with handling personal information from users concern three data security dimensions: data privacy and GDPR compliance, intellectual property and copyright infringement, and misinformation and biased information.

Data privacy and GDPR compliance

In its Privacy Policy, OpenAI states that they collect personal information from users, including data that the user provides, such as account information and content used as input, and also technical information derived from the use of their services, such as log data, usage data, device information, cookies, and analytics. Therefore, the collection and use of this information are subject to the General Data Protection Regulation (GDPR) requirements set out by the European Union.

Since the launch of ChatGPT, numerous European experts have highlighted concerns regarding GDPR compliance and data privacy in general, uncovering several conflict areas that range from where data is processed to its vulnerability to data breaches and hacking attacks. These concerns led European authorities to launch several investigations and, in the case of Italy, to temporarily ban ChatGPT. Here are some of the main conflict areas currently in the spotlight:

- No legal basis to collect data from individuals: At the time of publication, OpenAI does not offer any legal document guaranteeing the safe and correct use of its users’ personal information, as required by GDPR. For business use, OpenAI offers a Data Processing Addendum that applies only to its business service offerings (API for data completion, images, embeddings, moderations, etc.) but not to consumer services like ChatGPT or DALL-E.

- Impossibility to comply with the “Right to be forgotten”: Article 17 of GDPR gives individuals the right to request the deletion of their information if it is no longer needed for the purpose for which it was initially collected. Large language models, such as ChatGPT, cannot completely erase data points once they have learned from them. They can only give them less weight so that this data is used less often. Besides, since data is constantly being used to produce new responses, which may later be distributed and used for different purposes, it is nearly impossible to erase all traces of that information.

- Vulnerability to data breaches and attacks: Storing massive amounts of data in large servers is not exempt from risks, as a recent incident confirmed. A bug in ChatGPT granted many individuals access to other users’ queries, log-in emails, payment information, and telephone numbers. While the consequences of this incident were relatively minor, experts warn that hackers can also leverage this massive data collection to cause devastating data breaches through advanced techniques like reverse engineering, which allows the attacker to trace the chat’s output back to the user’s personal information.

- ChatGPT’s use of collected data may violate other sites’ terms of use: The data used to train ChatGPT was collected by scraping hundreds of thousands of internet sites with their own terms of use, some of which ban the commercial use of their information. Some experts consider ChatGPT a commercial product, which would mean that this data is being used unlawfully.

Intellectual property and copyright infringement

Intellectual property and copyright infringement is also a troubling aspect of ChatGPT’s output. OpenAI’s Terms of Use assign ownership of the output to the user and state that the content it produces is original – although not necessarily unique. Taking this into account, the commercial use of the chat’s output would be possible, but more aspects need to be considered, such as the data it was trained with and the methods used to collect it.

Beyond the obvious data privacy concerns of scraping billions of data points from sites, it is impossible to know if the output of the chat was created based on copyright-protected content, which would infringe on the rights of the owner. It is important to remember that ChatGPT does not mention the sources where the information comes from, as a human would do when writing any piece of content. Therefore, check the output for copyrighted information and consult a legal advisor before using it for commercial purposes.

Misinformation and biased information

OpenAI’s lack of transparency concerning the data used to train the tool raises many doubts about the accuracy, integrity, and objectivity of the chat’s output. As stated by OpenAI, “ChatGPT may produce inaccurate information about people, places, or facts,” which experts call “hallucinations.” These are especially concerning when they include outdated or wrong user data. According to the European GDPR, users have the right to have their information corrected or deleted if it is inaccurate. However, ChatGPT does not currently offer users the option of correcting or deleting their information, meaning users do not have control over their own data. Since the chat’s output is based on the information it was trained with, there is also the risk of biased responses that, without correction, could perpetuate social stereotypes.

As a result, ChatGPT is capable of generating false statements, which could be used to maliciously or unintentionally spread misinformation and fake news. Moreover, as the use of ChatGPT becomes widespread, the risk of individuals dangerously relying on ChatGPT to solve certain sensitive matters, such as legal disputes or medical conditions, dramatically increases. It is important to remember that, even if this tool can be very helpful for a great variety of uses, it is better to consult with professionals regarding sensitive topics where a false statement could lead to serious consequences.

The amplifying effect of ChatGPT misuse on cyber security threats

The data security risks of ChatGPT are only one part of the story. As society acclimates to harnessing ChatGPT’s full capabilities, cybercriminals – even those with little technical expertise – are also adapting and finding new ways to exploit this technology to their advantage.

Despite the restrictions imposed by OpenAI to prevent the unlawful use of their application, hackers have managed to circumvent them, using ChatGPT to fuel their malicious objectives and further scale their attacks. This fraudulent use of ChatGPT is bound to negatively impact the cyber threat landscape, according to 74 percent of IT professionals surveyed by SoSafe.

Some of the most concerning applications of ChatGPT are coding malware, crafting well-written phishing emails en masse, and supporting impersonation and catphishing scams.

Coding malware

ChatGPT has been employed in the development of harmful software, including the generation of encryption scripts with potential applications in ransomware attacks and the refinement of pre-existing malware code. Perhaps the use with the greatest potential to worsen the cyber threat landscape is the ability to produce highly sophisticated “polymorphic” malware, a type of software whose code can continuously alter its form to circumvent conventional security mechanisms, thus enhancing its effectiveness in cyberattacks.

ChatGPT’s ability to streamline the code writing process worries many security experts, as it enables individuals with minimal technical expertise to create functional code. Although ChatGPT’s coding capabilities are still limited and the output usually requires some editing, this could compensate for the lack of knowledge of amateur cybercriminals and effectively democratize cybercrime.

Crafting hard-to-detect phishing emails en masse

Recent research conducted by SoSafe’s social engineering team indicates that generative AI tools can make hacker groups at least 40 percent more efficient at crafting phishing emails, making it easier for them to scale their attacks by producing phishing emails en masse. Besides, mass phishing attempts are not as easy to recognise as they used to be: ChatGPT can write skilfully tailored and well-written emails without spelling mistakes or typos in many languages, broadening the attackers’ target audience and increasing the effectiveness of attacks. Recent data from the SoSafe Awareness Platform, which anonymously assessed nearly 1,500 simulated phishing attacks, showed that 78 percent of people opened AI-written phishing emails, with one in five clicking on malicious elements – such as fraudulent links or attachments – within the email.

Impersonation and catphishing

ChatGPT can generate convincing personas that gain the victims’ affection to ultimately obtain substantial payments or sensitive information from them.

Romance scams are already an alarmingly successful business for hackers. The US Federal Trade Commission reported that nearly 70,000 consumers fell victim to such schemes in 2022, with losses of approximately $1.3 billion. The speed and simplicity with which ChatGPT can produce coherent output impersonating someone raise concerns over the potential increase in the prevalence and effectiveness of romance scams.

To read more about the explosive impact of technological innovation on cybercrime, read our new Human Risk Review 2023 report.

Best security practices to maximize ChatGPT’s potential

With all the potential risks of such a powerful tool, it is important to learn how to make use of its benefits while mitigating its hazards to the greatest extent possible. ChatGPT can boost your productivity, help you brainstorm ideas and research topics, and support a myriad of other applications, but it is essential to employ it responsibly and securely. To help you achieve this balance, we compiled a list of best security practices for using ChatGPT:

- Seek legal advice when using ChatGPT output for business purposes. Your data protection officer will inform you about the restrictions, limitations, and recommendations regarding data privacy, intellectual property, and copyright protection.

- Remember that business information is sensitive and may be confidential. Using it as input for ChatGPT may be a violation of your company’s internal policies and could violate existing contracts (or NDAs) with customers.

- If your input includes personal data, replace it with fake information. Once the output is generated, you can swap the real data back in outside ChatGPT.

- Check the credibility of the output. Always ensure that the chat output is accurate by doing further research to avoid spreading misinformation.

- Don’t write prompts for unethical reasons or to engage in discrimination or hate speech.

- Avoid asking the chat for medical or legal advice. ChatGPT’s output may be inaccurate or false, so always rely on professionals in these sensitive areas.

- ChatGPT now allows you to turn off the chat’s history. If you turn it off, OpenAI will not use the data contained in those chats to train their models.
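The tip about replacing personal data with fake information can be sketched in a few lines. This is a minimal illustration, not a complete anonymisation tool: the helper names `pseudonymize` and `restore`, the bracketed placeholder format, and the sample values are all assumptions made for this example, and you still have to list every sensitive value yourself. The step of actually sending the prompt to ChatGPT is left out on purpose.

```python
def pseudonymize(text: str, replacements: dict[str, str]) -> str:
    """Swap each real value for its placeholder before the text leaves your machine."""
    for real, placeholder in replacements.items():
        text = text.replace(real, placeholder)
    return text


def restore(text: str, replacements: dict[str, str]) -> str:
    """Swap the placeholders back to the real values in the generated output."""
    for real, placeholder in replacements.items():
        text = text.replace(placeholder, real)
    return text


# Hypothetical mapping of real values to placeholders; it never leaves your machine.
mapping = {
    "Jane Doe": "[PERSON_1]",
    "jane.doe@example.com": "[EMAIL_1]",
}

prompt = "Write a reminder to Jane Doe at jane.doe@example.com."
safe_prompt = pseudonymize(prompt, mapping)
print(safe_prompt)  # this is the text you would paste into ChatGPT

# Pretend this came back from the chat; the real data is restored locally.
output = "Dear [PERSON_1], this is a reminder sent to [EMAIL_1]."
print(restore(output, mapping))
```

Plain string replacement is case-sensitive and only catches exact matches, so variants such as nicknames or differently formatted phone numbers need their own entries in the mapping.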

What’s next? – Bridging the gap between the user’s rights and AI innovation

The rapid development of generative AI tools like ChatGPT presents both immense potential and significant security risks. As we continue to integrate these technologies into our lives, it is essential for OpenAI and European organisations to collaborate and find middle ground on critical issues, such as GDPR compliance, copyright infringement, and the spread of misinformation.

While the benefits of AI are undeniable, we must remain vigilant and address the concerns, doubts, and fears that arise in response to these advancements. It is becoming clear that dedicated regulatory authorities will need to be established in the near future. In the meantime, governments and European organisations are working to fill this gap through legislation, such as the recent Artificial Intelligence Act.

Alongside these initiatives, individuals can adhere to existing recommendations to protect their sensitive data, and companies should promote a security awareness culture that instructs employees on preserving the organisation’s confidential information. Cyber security awareness training, such as SoSafe’s, allows employees to learn how to embrace innovation and harness the capabilities of AI tools without compromising the company’s security and privacy.

FAQs

- Is ChatGPT safe for business use?

Contact your legal team before using ChatGPT for business purposes. Depending on the business type, you may need to consider different restrictions and limitations regarding data protection and copyright infringement.

- Why does ChatGPT ask for a phone number?

ChatGPT requests the user’s phone number as the second step of the two-factor authentication process that protects the account against security breaches. It also allows ChatGPT to verify that you are a human, and it can be helpful for password recovery purposes.

- Does ChatGPT record data?

ChatGPT records several types of data from the user: account information (personal information and payment details), content used as input – although you can now opt out of having it recorded – and technical data, including usage data, device information, log data, cookies, and analytics.